Advice on sharing accessible content during the Coronavirus pandemic

Molly Watt explains how to make content accessible, with tools and techniques to make information shared during the Coronavirus pandemic more inclusive.

Studies show that 55% of the UK public access the news online. In the current climate, where public information, video explainers on social media, GIFs and infographics are themselves going viral, I'm sharing some tips and guidance on how to ensure your content is fully accessible to all. It has always been important for everyone to have equal access to world news, and even more so in times of worry. Making content accessible is not only a moral obligation: not being fully informed is also a possible health risk for individuals.

The importance of video captions

It's not just the hard of hearing/Deaf community that benefits from content being subtitled, but that is the starting point: the deaf community comprises over 350 million people worldwide, 11.5 million of whom live in the UK.

Captions also improve comprehension for a variety of audiences. For example, people who have:

- English as a second language

- learning disabilities

- attention deficits

- situational access needs, such as being on a busy train and unable to play sound

In today’s concerning climate, people are looking online for resources to rely upon more than ever. Studies have previously shown that 85% of users on social media watch video content with no sound.

From an organisational and brand perspective, there are clear reasons for captioning content. Besides the moral obligation, it improves overall user experience and can increase search engine optimisation (SEO), as Google favours informative content.

In the US and EU, there are laws and accessibility directives under which a company or public body that does not create accessible content may be discriminating and could face legal action. But captions don’t need to be viewed as a legally required add-on: when done right, they can be part of a creative, responsible strategy that draws more people in.

Transcripts vs captions

Transcripts and captions are different things, and both are important. A transcript is simply a text document and, unlike captions, has no time information attached. Captions are divided into text sections that are synchronised with video and audio content. Both 'verbatim' transcripts and captions should portray speech and sound effects, as well as identify different speakers.
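To make the difference concrete, here is a minimal sketch that turns the same timed segments into either a plain transcript or a WebVTT caption file. The `Segment` class, the function names and the sample dialogue are hypothetical and only for illustration; WebVTT is one common caption format, not the only option.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One spoken line or sound effect (hypothetical structure)."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    speaker: str
    text: str


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_transcript(segments):
    """A transcript identifies speakers but carries no timing."""
    return "\n".join(f"{s.speaker}: {s.text}" for s in segments)


def to_webvtt(segments):
    """Captions are the same text, split into timed cues."""
    lines = ["WEBVTT", ""]
    for s in segments:
        lines.append(f"{format_timestamp(s.start)} --> {format_timestamp(s.end)}")
        lines.append(f"<v {s.speaker}>{s.text}")  # voice tag names the speaker
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample content, including a sound effect in square brackets.
    sample = [
        Segment(0.0, 2.5, "Presenter", "Welcome to today's update."),
        Segment(2.5, 4.0, "Audience", "[applause]"),
    ]
    print(to_transcript(sample))
    print()
    print(to_webvtt(sample))
```

The transcript output could be published alongside a podcast, while the WebVTT output is the kind of file a video player can synchronise with playback.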

Why transcription matters

Transcripts offer a great alternative for users who would benefit from having audio and video content written out. Some assistive technologies used by disabled people cannot access video and/or audio content. In these cases, transcripts are invaluable.

Radio shows and podcasts should not be overlooked, and transcripts make these services accessible. Transcripts have the same benefits as captions, such as improved comprehension and greater user interaction, and again, they can help with SEO. As an example, transcription improved This American Life's SEO by over 6%.

Transcripts alone will not meet accessibility standards; however, they can contribute greatly by being:

- the first step for captioning

- the best solution to make radio and/or podcasts accessible

- easier to translate into other languages

- helpful for those with English as a second language, as well as people living with various cognitive and visual disabilities

- more compatible with assistive technologies, as they are in a text document format.

Tips on how to do captioning

On YouTube

In YouTube’s Studio, you can add a transcription file or manually amend/edit your own captions.

There is an option to use 'automatic captioning', created by YouTube's in-built speech recognition technology. Note that these captions are not always accurate, so it's important to review them before posting.

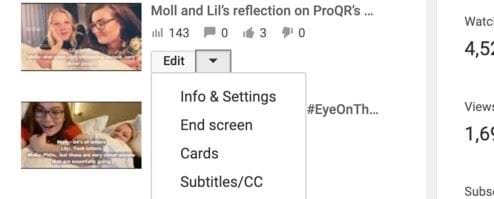

- Go to your Video Manager by clicking your account in the top right-hand corner > Creator Studio > Video Manager > Videos.

- Next to the video you want to add captions or subtitles to, click the drop-down menu next to the Edit button.

- Select Subtitles and CC.

- If automatic captions are available, you'll see Language (Automatic) in the 'Published' section to the right of the video.

- Review automatic captions and use the instructions to edit or remove any parts that haven't been properly transcribed.

Interestingly, YouTube now has in-built tools that can help and encourage users to have content translated. To do this, you can add translated video titles and descriptions and in YouTube’s words: "We'll show the title and description of the video in the right language, to the right viewers".

Closed vs open captions

YouTube captions are 'closed', meaning they only appear when the user turns them on. If you are sharing informative content on social media platforms, having captions embedded in the video at all times (open captions) would be more inclusive. Plus, according to Facebook research, open captions increase watch time by 12%.

Live captioning apps

Microsoft Teams

“With live captions, Teams can detect what's said in a meeting and present real-time captions for anyone who wants them.”

Microsoft Teams is full of inclusive design features that can really help users, but most people probably don't think of using them.

Firstly: live captions during meetings. Within Teams, under 'More options', you can 'Turn on live captions' to improve access and comprehension for anyone who needs or wants captions. As you would expect with live captioning, there can be a slight delay, but the transcriptions themselves are pretty accurate. However, note that if the speaker has an accent or is speaking in a busy environment, the captioning will not be as precise. If you're working from home, as many of us are right now, consider background noise during meetings. You can also blur your background so that people who lip-read can focus on your speech.

The second feature within Teams is the ability to translate content. This is more important than ever and will encourage and enable collaboration across the globe. Currently, live translation is only available in some languages, but once this changes, the accuracy of translation services can only get better.

Thirdly, Teams gives us the option to zoom in on content, including during presentations.

Dark mode is also available on Teams, which can help users access content by reducing brightness; high brightness can contribute to headaches and eye strain. Other Teams features, such as voice messages, shortcut keys, focus time and speech-to-text voicemail, all have the potential to improve a user’s access to information. If you record a meeting, the video can be played back with captions using Microsoft Stream.

Microsoft has also embedded the fantastic Immersive Reader feature in Teams (users can access IR on Word Online, OneNote, Outlook, PowerPoint and Office Lens) which can improve the readability of Teams' messages. Within the Immersive Reader, there are various settings such as grammatical and reading level tools that can help the user understand the content better.

Microsoft PowerPoint options

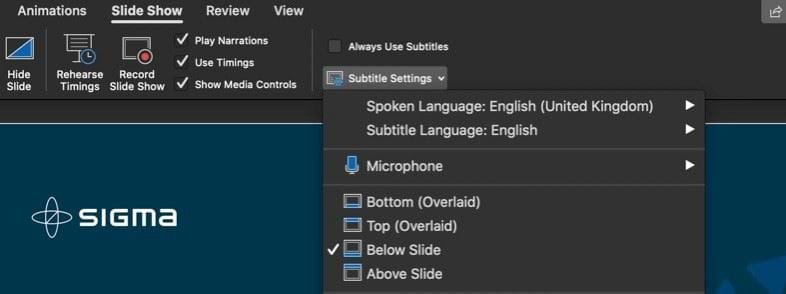

“Present with real-time, automatic captions or subtitles in PowerPoint.”

PowerPoint on Office 365 can transcribe live as you are presenting. On-screen captions are shown in the language you are speaking, or they can be translated into another language. Additionally, you can change the position, colour and size of the captions to make them more accessible for a wider audience.

Clipomatic

“Clipomatic is a smart video editor that turns everything you say into live captions. All you have to do is hit the magical record button, speak clearly and your words will appear as stylish captions right on your recording. Enhance the video with one of the artistic filters and wow your friends!”

Whether you are a famous vlogger, MP or a member of the public wanting to share an opinion, Clipomatic's user-friendly app is useful for a short video recorded on mobile devices. This speech recognition app will pick up on your live voice and transcribe what you are saying.

If you do not speak clearly, if there is background noise, or if there is more than one person in the shot, it can get tricky. You can, however, edit and correct the captions. The fonts and designs are a little restrictive, as you cannot change the size of the text or add a background to create better contrast, which is crucial for many people to access the text. It is ideally suited to videos of a couple of minutes that you share on social media.

Captioning apps for pre-recorded content

MixCaptions

“Creators use MixCaptions as the final step to complete editing their videos. Simply open MixCaptions, choose your video, generate captions, and the app will automatically do the rest. You can also easily edit captions to your preference.”

Unlike Clipomatic, this tool does not create live captioning. By adding a video (up to 10 minutes) into the app, it uses speech recognition to create the captions. This mode of captioning would probably be easier for longer clips, featuring more than one person.

MixCaptions gives the ability to edit transcription and add details, such as when the speaker changes. On a positive note, you can change the size and colour of the text, as well as adding a background to increase contrast. Contrast is important with video and text content, particularly where light and colours are present.
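When choosing caption text and background colours, one way to put a number on "contrast" is the contrast ratio defined in the Web Content Accessibility Guidelines (WCAG). The sketch below assumes colours are given as 8-bit sRGB tuples; the function names are my own, and applying the WCAG threshold of 4.5:1 for normal-sized text to video captions is a suggestion rather than something the apps above enforce.

```python
def _linearise(channel: int) -> float:
    """Convert one 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb) -> float:
    """Relative luminance of an (R, G, B) colour, from 0 (black) to 1 (white)."""
    r, g, b = (_linearise(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background) -> float:
    """Contrast ratio between two colours, from 1:1 up to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    white, black, yellow = (255, 255, 255), (0, 0, 0), (255, 255, 0)
    for name, fg, bg in [
        ("white on black", white, black),
        ("yellow on white", yellow, white),
    ]:
        ratio = contrast_ratio(fg, bg)
        verdict = "passes" if ratio >= 4.5 else "fails"
        print(f"{name}: {ratio:.2f}:1 ({verdict} the 4.5:1 threshold)")
```

White text on a black background reaches the maximum ratio, which is one reason a solid dark box behind light captions is such a dependable choice.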

After you have used a certain number of characters in transcribing, you need to make in-app purchases in order to continue editing captions. You can download MixCaptions from the App Store.

Amara Editor

"The Amara Editor is an award-winning caption and subtitle editor that’s free to use! It’s fun and easy to learn and encourages collaboration. Whether you’re an independent video creator, someone helping a friend access a video, or a grandchild translating a family clip for Grandma – the Amara Editor is the simplest way to make video accessible."

Like MixCaptions, you need video content to make a start. But this time, you can use a URL (YouTube, Vimeo, MP4, WebM, OGG and MP3) to proceed with manual captions.

Amara is a desktop subtitling application that offers the ability to create your own captions, or purchase professional subtitling services from the Amara team.

Once signed up, you can find a video URL to add subtitles to. You quickly get familiar with the Amara Editor’s keyboard controls (play/pause, skip, insert line break) and can then begin typing up manual captions. After you have finished, you can edit to make sure the captions are synchronised with the video and audio content.

Image descriptions

What is alt text?

Users who are visually impaired may rely on a screen reader, which reads the alt text description aloud and indicates that it belongs to an image. Alt text informs the user what is in the image. It can be fairly basic, as long as it is useful enough to help the user understand the content. If an image fails to load, the alt text is shown instead. If you're sharing images on Twitter, there is an Alt option you can use to add descriptive text. If you use hashtags in your tweets, you should write them in camel case so the screen reader can identify the separate words, for example, #BeMoreInclusive.
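If you schedule or generate posts with a script, the camel case rule for hashtags is easy to automate. This is a small sketch with a hypothetical helper name; it capitalises the first letter of each word and leaves the rest untouched, so acronyms keep their capitals.

```python
def camel_case_hashtag(phrase: str) -> str:
    """Turn a phrase into a camel case hashtag, e.g. '#BeMoreInclusive'."""
    words = []
    for raw in phrase.split():
        # Hashtags stop at punctuation, so keep letters and digits only.
        cleaned = "".join(ch for ch in raw if ch.isalnum())
        if cleaned:
            words.append(cleaned[0].upper() + cleaned[1:])
    if not words:
        raise ValueError("phrase must contain at least one word")
    return "#" + "".join(words)


if __name__ == "__main__":
    print(camel_case_hashtag("be more inclusive"))
```

Because each word starts with a capital letter, a screen reader can announce the words separately instead of as one long string.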

What are image descriptions?

When writing image descriptions, here are some tips on what to describe from the Perkins School for the Blind:

- Placement of objects in the image

- Image style (painting, graph)

- Colours

- Names of people

- Clothes (if they are an important detail)

- Animals

- Placement of text

- Emotions, such as smiling

- Surroundings

Likewise, there are some things that should be left out of image descriptions. You don't need to describe:

- Descriptions of colours - no need to explain what red looks like

- Obvious details such as someone having two eyes, a nose, and a mouth

- Details that are not the focus of the picture

- Overly poetic or detailed descriptions

- Emojis

- Multiple punctuation marks

Automatic alt text

Automatic alt text sounds great and can be a useful feature; however, as with speech recognition, the quality varies. Make sure you check that the alt text accurately describes the content of images. If you get the chance, try to test your content with screen reader technology to get a feel for how it sounds when read aloud.

Writing alt text

Always add meaningful descriptive language for alt text. Here’s an example of what to do and what not to do:

What to avoid: a black dog

What's better: A cute Black Labrador wearing a Guide-dog harness, lying on the floor of a bus, looking up with big brown eyes to the camera.

By doing this you are not obstructing any viewers from comprehending the content. They get to enjoy it too.
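If you publish images on a web page, a short script can list the ones that still need a description. The sketch below uses Python's standard `html.parser` module; the class name, function name and sample HTML are made up for illustration. It can only tell you that alt text is missing or blank, not whether existing alt text is meaningful, so a human review is still needed.

```python
from html.parser import HTMLParser


class AltTextChecker(HTMLParser):
    """Collects the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> list:
    """Return the sources of images that have no usable alt text."""
    checker = AltTextChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


if __name__ == "__main__":
    page = (
        '<p><img src="guide-dog.jpg" alt="A black Labrador in a guide dog harness">'
        '<img src="infographic.png"></p>'
    )
    for src in images_missing_alt(page):
        print(f"Needs alt text: {src}")
```

Note that an empty `alt=""` is sometimes used on purpose for purely decorative images, so treat the results as a list to review rather than a list of errors.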

Video descriptions

You might think the only way to make video accessible to people who are blind or visually impaired is to have audio descriptions. You're not wrong. However, there are also steps you can take when creating video content to make sure it is accessible:

- Audibly describe relevant visual information

- Take regular pauses (this allows any third-party transcription apps or services to catch up)

- Speak one at a time, and identify the person who is speaking

- If there's audience participation, use descriptive language, for example: "If you've eaten lunch, put your hand up. Molly has her hand up."

Plain English

Plain English benefits the hard of hearing and Deaf community, those with low literacy skills and those with cognitive impairments, as well as the more than half of the world's population who are bilingual. It also helps someone understand a new topic they might want to learn more about.

The UK's average reading age is 9, which is why papers such as The Sun and The Guardian write at readability levels of 8 and 14 years respectively. This is to attract as many readers as possible and to ensure the language used is easy to understand. The team at Content Design London offer some excellent guidance and training if you need help with this.
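For a rough sense of how demanding your own text is, you can estimate a readability score. The sketch below uses the Flesch-Kincaid grade level formula, which is my choice for illustration and not necessarily the measure behind the figures above. It returns a US school grade (very roughly, adding five gives an approximate age), and the syllable counter is a simple heuristic, so treat the result as a guide only.

```python
import re


def count_syllables(word: str) -> int:
    """Heuristic syllable count: vowel groups, minus a likely silent final 'e'."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Estimate the Flesch-Kincaid grade level of a piece of English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        0.39 * len(words) / len(sentences)
        + 11.8 * syllables / len(words)
        - 15.59
    )


if __name__ == "__main__":
    plain = "Wash your hands. Stay at home."
    dense = (
        "Individuals experiencing symptomatic presentation should "
        "immediately commence self-isolation procedures."
    )
    print(f"Plain version: grade {flesch_kincaid_grade(plain):.1f}")
    print(f"Dense version: grade {flesch_kincaid_grade(dense):.1f}")
```

Short sentences and short words pull the score down, which is the same effect plain English aims for.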

Inclusive content for everyone

Accessibility has never been as important as it is in this difficult time, and we should not forget there are 1 billion people living with some form of disability worldwide, with 14.1 million living in the UK.

While we've considered those registered with a disability, we have not taken into account those who are not yet registered or do not identify as someone with a disability. Furthermore, let’s not forget our ageing population, which has increased globally in the last couple of decades. This requires us to think about digital inclusion in new ways, as it means we need to consider digital skills, confidence, affordability of equipment and internet access alongside specific assistive technology needs.

I hope these tips will help you to create content that is more inclusive and that you start to reach more people with your messaging during this difficult time. With the rapid and unexpected increase in home-working, please think about your disabled colleagues, who may not be able to adapt to new ways of working unless you put some of these accessibility measures in place for meetings and remote presentations.

We are being bombarded with information, and although organisations like the NRCPD, ASLI and Royal Association for the Deaf, among others, lobbied for a sign language interpreter for the Prime Minister's public briefings, accessibility is sadly still often an afterthought.

Please do what you can to share information publicly, on social media and across your teams in an inclusive way.